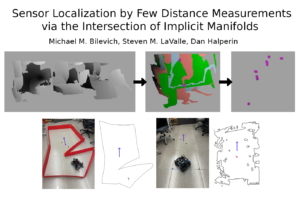

We present a general approach for determining the unknown (or uncertain) position and orientation of a sensor mounted on a robot in a known environment, using only a few distance measurements (typically between 2 and 6). Relying on so few measurements is advantageous in, among other things, sensor cost and storage and communication resources. Between measurements, the robot can perform predetermined local motions in its workspace, which help narrow down the candidate poses of the sensor. We demonstrate our approach for planar workspaces and show that, under mild transversality assumptions, two measurements already suffice to reduce the set of possible poses to a set of curves (one-dimensional objects) in the three-dimensional configuration space of the sensor, \(\mathbb{R}^2\times\mathbb{S}^1\), and that three or more measurements reduce it to a finite collection of points.

However, computing these candidate poses analytically for non-trivial intermediate motions between measurements is substantially difficult, so we resort to numerical approximation. We reduce the localization problem to a carefully tailored procedure for intersecting two or more implicitly defined two-manifolds, which we carry out to any desired accuracy, with provable guarantees on the quality of the approximation. We demonstrate that our method runs in real time, even at high accuracy, in various scenarios and with different allowable intermediate motions. We also present experiments with a physical robot.
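To make the idea of intersecting measurement constraints concrete, the following is a minimal illustrative sketch, not the procedure of this work. It assumes a rectangular room, a sensor that only rotates in place between measurements, and a brute-force uniform grid over \(\mathbb{R}^2\times\mathbb{S}^1\) with no approximation guarantees. The names `ray_distance` and `candidate_poses`, the grid resolution, and the tolerance are all hypothetical choices made for illustration.

```python
import math

def ray_distance(x, y, theta, width, height):
    """Distance from an interior point (x, y) along heading theta to the
    boundary of the axis-aligned rectangle [0, width] x [0, height]."""
    dx, dy = math.cos(theta), math.sin(theta)
    best = math.inf
    if dx > 1e-12:
        best = min(best, (width - x) / dx)   # right wall
    elif dx < -1e-12:
        best = min(best, -x / dx)            # left wall
    if dy > 1e-12:
        best = min(best, (height - y) / dy)  # top wall
    elif dy < -1e-12:
        best = min(best, -y / dy)            # bottom wall
    return best

def candidate_poses(measurements, rotations, width, height,
                    n=40, n_theta=72, tol=0.05):
    """Keep the grid poses (x, y, theta) consistent with every measurement.

    measurements[i] is assumed to be taken after rotating the sensor in
    place by rotations[i] relative to its unknown initial heading.
    """
    hits = []
    for i in range(n):
        x = (i + 0.5) * width / n
        for j in range(n):
            y = (j + 0.5) * height / n
            for k in range(n_theta):
                theta = 2 * math.pi * k / n_theta
                if all(abs(ray_distance(x, y, theta + r, width, height) - d) <= tol
                       for d, r in zip(measurements, rotations)):
                    hits.append((x, y, theta))
    return hits

# Hypothetical scenario: a 4 x 3 room and a ground-truth pose used only to
# synthesize the measurements.
W, H = 4.0, 3.0
true_pose = (1.05, 0.7875, math.pi / 6)
rotations = [0.0, math.pi / 2, math.pi]
measurements = [ray_distance(true_pose[0], true_pose[1], true_pose[2] + r, W, H)
                for r in rotations]

one = candidate_poses(measurements[:1], rotations[:1], W, H)
three = candidate_poses(measurements, rotations, W, H)
print(len(one), len(three))
```

Each measurement on its own is consistent with a two-dimensional family of grid poses; requiring all measurements to agree keeps only their common poses, so the candidate set shrinks as measurements are added. Because the room is symmetric, several distinct poses may remain even with three measurements.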

Our open-source software and supplementary materials are available at https://bitbucket.org/taucgl/vb-fdml-public.